AI is changing the world, and it is certainly having an impact on research design and workflow. Today we look at some AI research assistants, which are trained to answer research questions based on a corpus of scientific literature.

Automating Research Workflow in Elicit

The first AI research assistant we’d like to introduce is Elicit. Since its release in 2021, it has rapidly gained attention for using GPT-3 language models to automate parts of the research workflow, such as understanding queries and extracting and summarizing a paper’s abstract, methodology, and key findings in plain language.

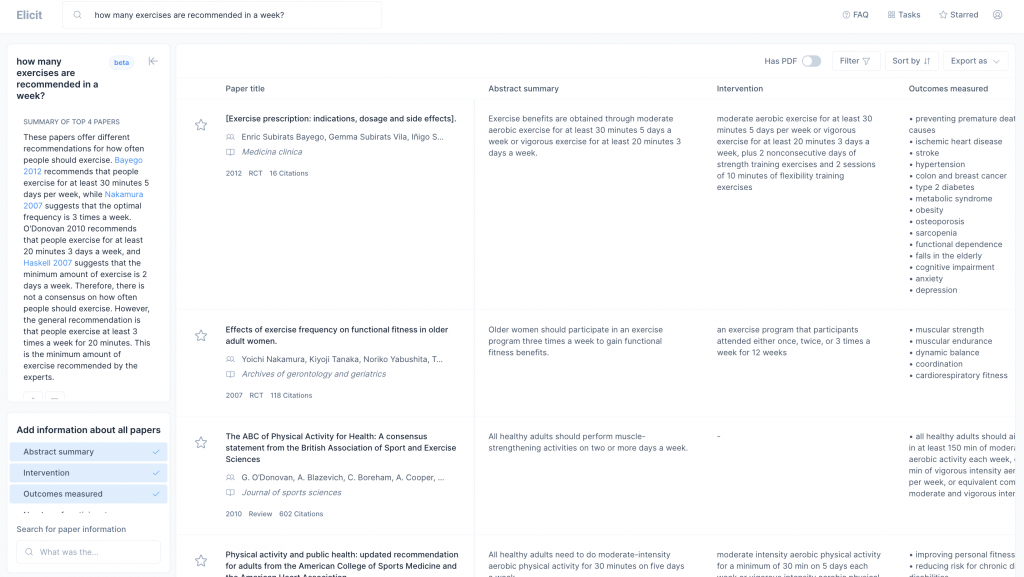

When it receives a question, Elicit returns answers drawn from a list of papers. It has also recently started to generate a short summary (in beta) of the top 4 papers.

Elicit answers a question by looking for relevant papers and gives a summary of the top 4 papers (left-hand side).

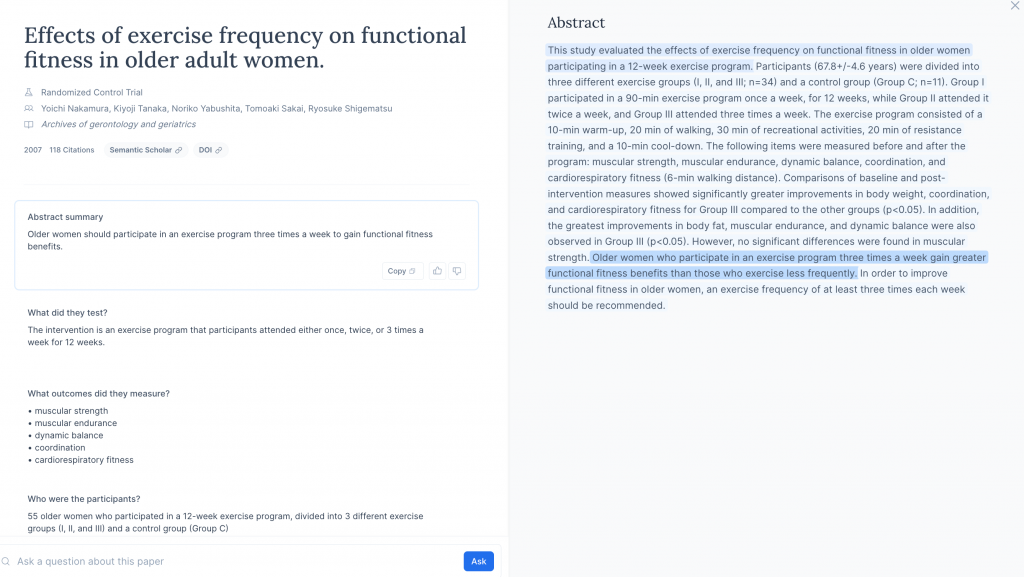

Clicking on any of the papers in the table shows summaries of components of the paper, such as “abstract”, “participants”, and “outcomes”, with the original text displayed side by side. Key statements in the original content are highlighted in blue for easy comparison.

Elicit summarizes key elements of the research at the paper level.

When I first used the feature, I was amazed at how readable and grammatically correct the summary was. How is this achieved? Elicit describes the general process in an FAQ:

- You enter a question.

- Elicit searches the Semantic Scholar API and retrieves the top 1,000 results.

- Elicit (re)ranks retrieved papers based on the relevance to your query, using several GPT-3 language models.

- For each paper, Elicit also retrieves title, abstract, authors, citations, and a few other bits of metadata from Semantic Scholar, and the PDF link from Unpaywall.

- Elicit returns relevant papers, shown one per row in the results.
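The steps above follow a retrieve-then-rerank pattern. The sketch below illustrates that pattern only and is not Elicit's implementation: the in-memory `CORPUS`, the `retrieve`, `rerank` and `answer` helpers, and the word-overlap score are all hypothetical stand-ins (for the Semantic Scholar search and the GPT-3 rerankers respectively), and the sample papers are made up.

```python
import re
from dataclasses import dataclass


@dataclass
class Paper:
    title: str
    abstract: str


# Hypothetical toy corpus standing in for Semantic Scholar search results.
CORPUS = [
    Paper("Sleep duration and health in adults",
          "Adults are advised to sleep seven to nine hours per night."),
    Paper("Parenting style and child personality",
          "Authoritative parenting is associated with child conscientiousness."),
    Paper("Caffeine and sleep quality",
          "Caffeine intake late in the day reduces sleep quality."),
]


def tokens(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, corpus, limit=1000):
    """Retrieval step: keep up to `limit` papers sharing a word with the query."""
    q = tokens(query)
    hits = [p for p in corpus if q & tokens(p.title + " " + p.abstract)]
    return hits[:limit]


def rerank(query, papers):
    """Rerank step: sort by relevance, most relevant first.

    Assumption: Jaccard word overlap is used here purely as a cheap
    stand-in for the language-model relevance scoring described above.
    """
    q = tokens(query)

    def score(paper):
        d = tokens(paper.title + " " + paper.abstract)
        return len(q & d) / len(q | d)

    return sorted(papers, key=score, reverse=True)


def answer(query, top_n=4):
    """Return the top `top_n` papers, one per result row."""
    return rerank(query, retrieve(query, CORPUS))[:top_n]


results = answer("how many hours of sleep are recommended per day?")
for paper in results:
    print(paper.title)
```

Splitting retrieval from reranking keeps the expensive relevance model off the full corpus: a cheap search narrows the field first, and only the shortlisted papers are scored in detail.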

In addition to the “literature review” feature, Elicit curates a list of “Tasks” that it can do to enhance the research workflow, such as brainstorming research questions and suggesting search terms. Try them out.

“Ask A Question” in Scite.ai

A similar “Ask A Question” feature was recently added to Scite.ai as well. Still in beta, it can only take simple questions in plain language. When it receives a question, scite searches its database of over 1.2 billion citation statements, indexed from over 32 million full-text articles, to surface relevant answers. In the screenshot below, the top results answer the question “how many hours of sleep are recommended per day?” quite well.

Scite.ai gives quite clear answers to the question “how many hours of sleep are recommended per day?”

For those of you who are new to the term, a “citation statement” is the passage of text in a paper that surrounds an in-text reference. The HKUST Library has a full subscription to scite.ai, whose key feature is to show clearly how papers are being cited (e.g., to support, contrast, or mention a previous claim) through bolded citation statements in the full text.

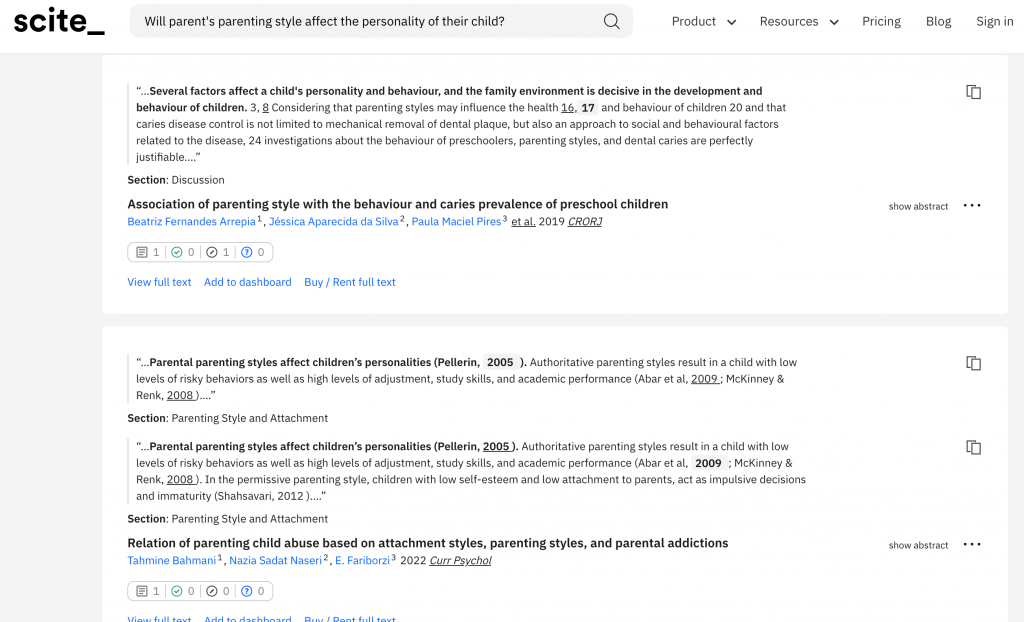
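To make the idea of a citation statement concrete, here is a small sketch of how such statements might be represented and tallied by type. The `CitationStatement` class, its field names, the `tally` helper and the sample records are all hypothetical illustrations; they do not reflect scite's actual data model or API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class CitationStatement:
    citing_paper: str  # paper that contains the in-text reference
    cited_paper: str   # paper being referred to
    text: str          # sentence(s) surrounding the reference
    kind: str          # "support", "contrast", or "mention"


# Made-up sample records, for illustration only.
STATEMENTS = [
    CitationStatement("Paper A", "Paper X",
                      "Our results agree with those reported in [X].", "support"),
    CitationStatement("Paper B", "Paper X",
                      "Unlike [X], we found no such effect.", "contrast"),
    CitationStatement("Paper C", "Paper X",
                      "Sleep duration has been studied before [X].", "mention"),
    CitationStatement("Paper C", "Paper Y",
                      "We replicate the main finding of [Y].", "support"),
]


def tally(statements, cited_paper):
    """Count how often `cited_paper` is supported, contrasted, or mentioned."""
    return Counter(s.kind for s in statements if s.cited_paper == cited_paper)


counts = tally(STATEMENTS, "Paper X")
print(dict(counts))
```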

As the feature is still in early development, when it is presented with questions that are a bit more complex (for example, “will parent’s parenting style affect the personality of their child?”), the results from scite.ai look much like a standard “paper search” rather than direct or ready-to-use answers to the question.

Scite cannot effectively answer questions that are a bit more complex, such as “will parent’s parenting style affect the personality of their child?”.

The “Dangerous” Galactica

Besides Scite and Elicit, which are developed by small start-up companies and teams, big tech companies such as Meta are also developing AI tools that aim to tackle the “information overload” challenge in scientific research. Galactica, co-developed by AI researchers at Meta and the open-source ML community Papers with Code, is a large language model that can store, combine and reason about scientific knowledge. However, its first release survived only three days. After testing it, several renowned experts publicly shared their concerns, criticising the tool as “statistical nonsense” and “dangerous”, and warning that it will “usher in an era of deep scientific fakes.” As a result, it was pulled from public use on 21 Nov.

When using any search tool built on AI language models, we should be aware of its limitations, which could introduce systematic errors and biases (take this FAQ from Elicit for example). To evaluate whether a search tool is reliable and trustworthy, we should check who the developer is and understand what underlying data the language models use, as well as the tool’s methods and business model. These points also echo what Mr. Aaron Tay (Data Service Librarian at SMU) shared in his recent talk at HKUST on how the availability of open data and open metadata is transforming the academic world and how scientists do research.

Links & Sources

Check out the links and resources below if you’re interested in learning more about this topic.

- Elicit: Language Models as Research Assistants

- Elicit mailing list

- Why Meta’s latest large language model survived only three days online

- Update: Meta’s Galactica AI Criticized as ‘Dangerous’ for Science

– By Jennifer Gu, Library

published November 25, 2022

last modified January 22, 2024