If you haven’t heard of or read about ChatGPT, your mind may be blown after watching this short video.

This is ChatGPT, an AI chatbot recently released by OpenAI. Pretty cool, right? You can simply give it an instruction or ask a question, and it generates a human-like response. You can also ask follow-up questions, as in a conversation.

AI chatbots are nothing new these days, so what makes this one so special? This little chatbot can do much more than chat: it can brainstorm ideas, write blog posts, poems, and music chords, and even generate programming code, all reasonably well. Since its launch, ChatGPT has attracted over a million users, and the internet is now flooded with interesting (and funny) examples.

At the core of ChatGPT is GPT-3 (more precisely, a model from OpenAI’s GPT-3.5 series), a very powerful large language model (LLM) that has been trained on a wide range of text data, including books, Wikipedia, and other common webpages.

This sparked our curiosity:

| Can this tool help researchers write scholarly articles? |

In this (somewhat long) post, we will walk you through some experiments with ChatGPT. By the end, we hope you will have a better understanding of this tool and can arrive at your own answer.

An Exploratory Journey with ChatGPT

As a first step, let’s see what this chatbot “thinks” about AI writing scholarly articles.

Experiment 1. “Can AI write scholarly articles?”

We typed in the question, and this is what we got:

What if we ask the same question again?

We have all experienced some form of AI writing in daily life, such as the auto-complete feature in search engines or predictive text in emails. Looking at the examples above, you may share our impression: this one is much more powerful. It can compose a coherent paragraph with no grammatical errors, and the answers seem quite sensible too.

From these two answers, we do learn a few facts about AI writing:

- AI is trained on existing research that was fed into the “training” system. It is not capable of generating original ideas or conducting original research.

- AI may give you a broad overview of a topic, but it cannot be used as a primary source for research.

Now, let’s try something more sophisticated.

Experiment 2. Writing a Literature Review

A literature review is an essential part of the research process. It helps researchers identify what is already known about a topic and where gaps in knowledge exist. In this experiment, let’s see whether ChatGPT can write an overview of existing research on a given topic.

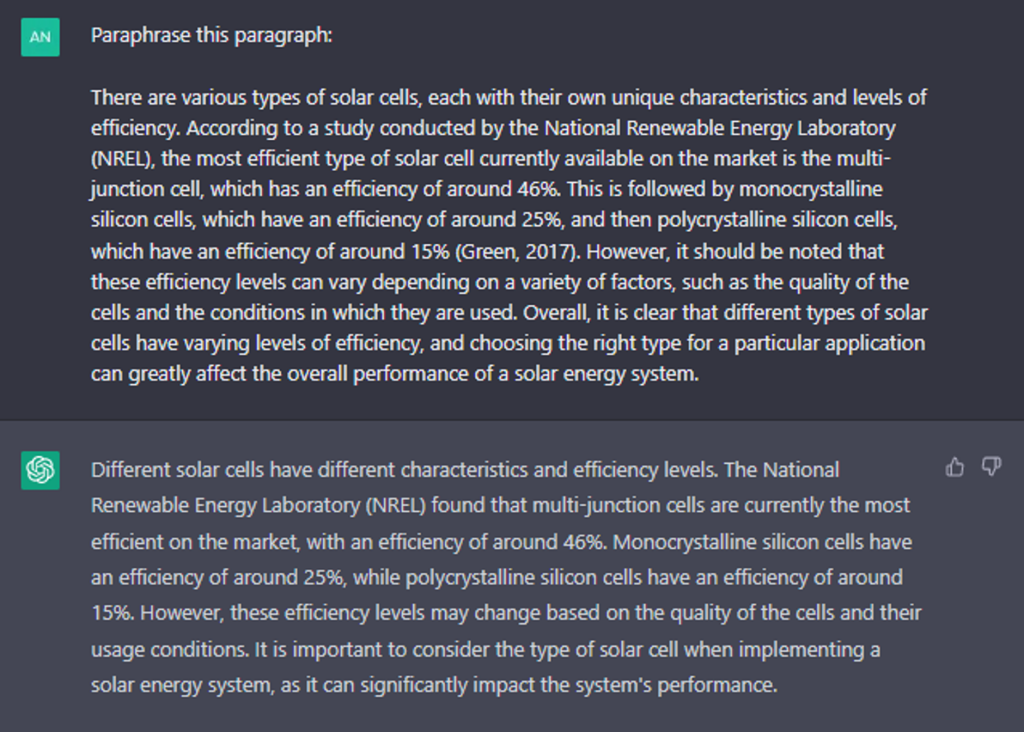

Suppose I am researching a new type of material for solar cells, and I want to learn how other types of solar cells compare in how efficiently they convert sunlight into electricity.

Here’s my prompt:

| Write a short paragraph in 300 words for an academic paper comparing the efficiency of different types of solar cells. Include citations and references in APA style. |

And this is what the chatbot wrote:

To be honest, this was not my first attempt: the chatbot did not include any citations to its source material unless I explicitly instructed it to.

From this answer, we can see that it is able to generate text with citations in a fairly accurate APA format (except that the journal title should be in italics). The paragraph cites a research article, “Solar cell efficiency tables”, which is indeed a credible source for confirmed efficiencies of solar cells and their modules.

However, after looking into the source article, we quickly spotted some problems:

- Outdated source: The article Solar cell efficiency tables (version 48) was published in 2016. This table is updated regularly, and the latest version is Solar cell efficiency tables (version 60), published in 2022. (Note that both articles are openly available online.)

- Incorrect claims: Even in version 48, the highest efficiency for multi-junction cells was reported by Soitec, CEA and Fraunhofer ISE, not NREL. In addition, as listed in the source article, many other types of cells achieved efficiencies over 25%, so the statement “followed by monocrystalline silicon solar cells, which have an efficiency of around 25%” is inaccurate.

- Fabricated citation: the APA format looks fine, but the details are wrong:

What the chatbot gave:

| Green, M. A. (2017). Solar cell efficiency tables (version 48). Progress in Photovoltaics: Research and Applications, 25(9), 1211-1216. |

The correct citation should be:

| Green, M. A., Emery, K., Hishikawa, Y., Warta, W., & Dunlop, E. D. (2016). Solar cell efficiency tables (version 48). Progress in Photovoltaics: Research and Applications, 24(7), 905–913. |

Even if it were referring to a version published in 2017, the citation should be:

| Green, M. A., Hishikawa, Y., Warta, W., Dunlop, E. D., Levi, D. H., Hohl‐Ebinger, J., & Ho‐Baillie, A. W. H. (2017). Solar cell efficiency tables (version 50). Progress in Photovoltaics: Research and Applications, 25(7), 668–676. |

From these findings, we can learn that:

- ChatGPT can give you incorrect information, which again means it cannot be used as a primary source for research. Although its landing page warns users that ChatGPT “may occasionally generate incorrect information” and “occasionally produce harmful instructions or biased content”, it can be hard for researchers to tell accurate information from false information, because the chatbot presents both with equal confidence and in the same style. We also noticed that at least one platform has temporarily banned content generated by ChatGPT because “the rate of being incorrect is high”.

- ChatGPT is not able to interpret scientific findings correctly. The response attributed claims to the study that it does not actually make, and gave a wrong citation and reference. At this stage, it still lacks human abilities such as critical thinking and reasoning.

- Currently, ChatGPT is not able to capture the latest research, because:

  - it has “limited knowledge of world and events after 2021” (also mentioned on its landing page),

  - it is not able to browse the internet for the latest information, and

  - it is not specifically trained on scientific papers. There are already language models trained on research papers, such as BioBERT, but they are relatively small and focus on a specific domain.

Experiment 3. Paraphrasing

Paraphrasing means conveying other people’s ideas in your own words. Done well, it helps writers avoid plagiarism and present the work of others ethically. It is a crucial skill in academic writing.

Let’s take the two paragraphs generated in the previous experiments and see whether the chatbot can paraphrase them.

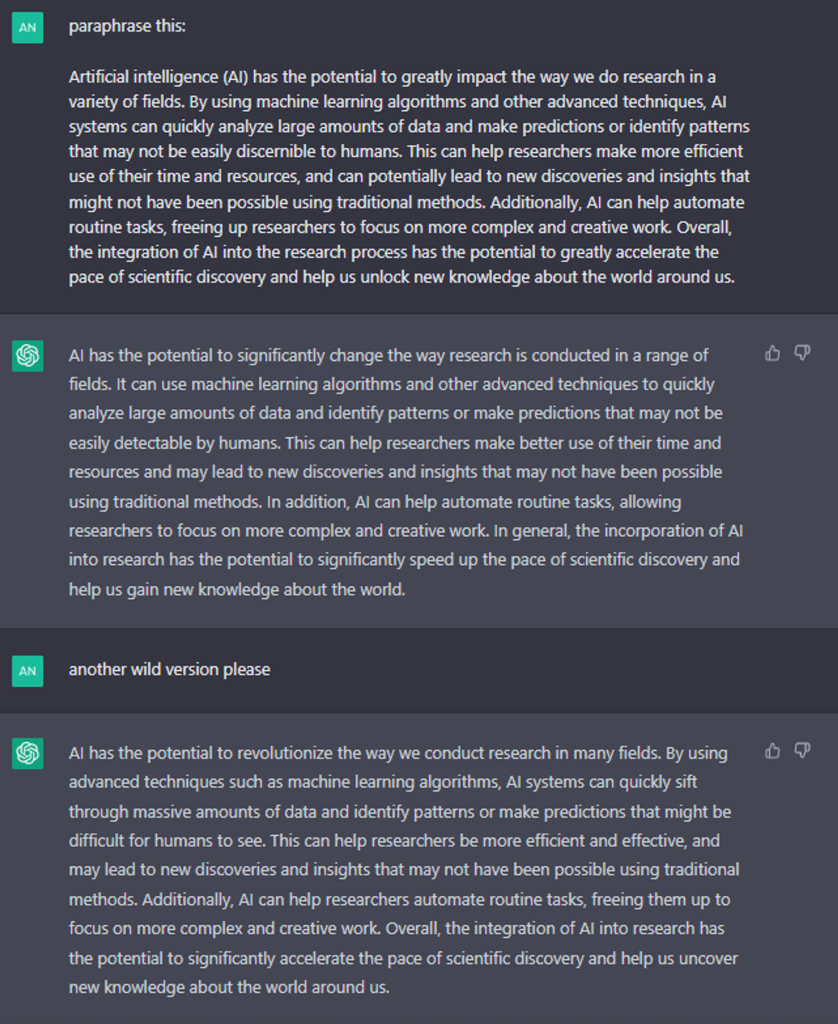

E.g.1 – Paraphrase the paragraph about solar cell efficiencies

E.g.2 – Paraphrase the paragraph about AI

From these results, we can see that:

- ChatGPT tries to express the same idea in a different way, but the changes are very mechanical: it simply replaces some words without significantly altering the sentence structure or the order of information. Paraphrasing of this kind can still be considered plagiarism.

- Rerunning the instruction produces a new but very similar result.

Paraphrasing involves understanding and synthesizing the original text, then recreating it in a way that fits the writer’s own context and writing style. The GPT-3 model, which powers this chatbot, is still weak at text synthesis and creative writing (as discussed in the GPT-3 paper), both of which are central to human intelligence.

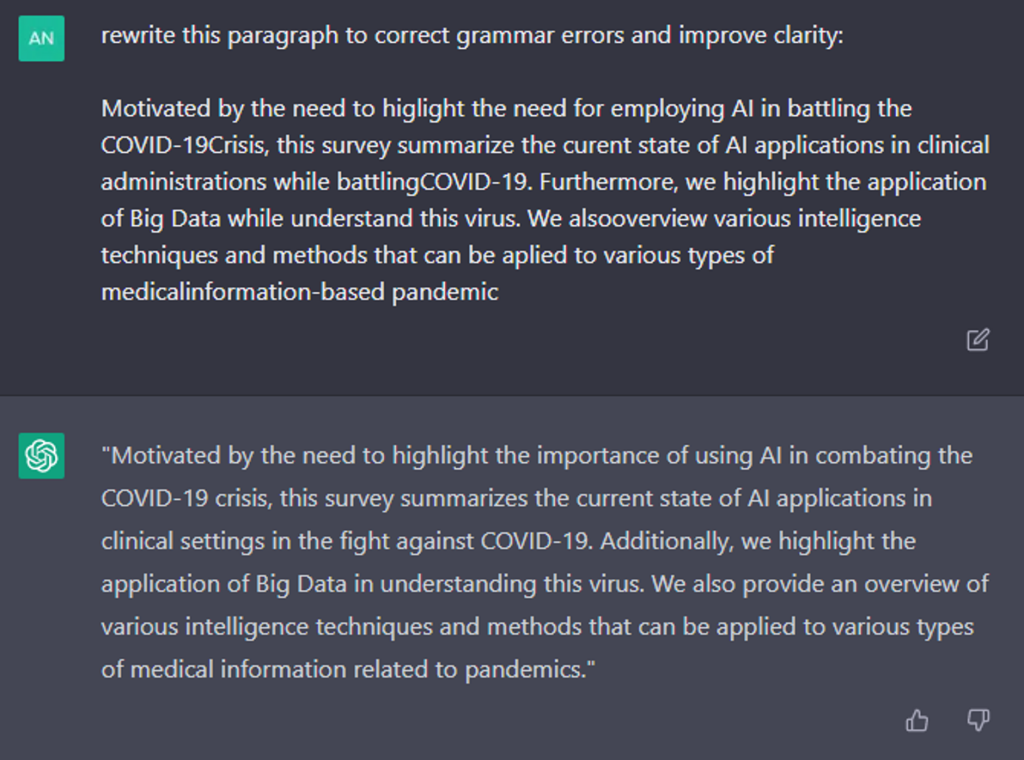

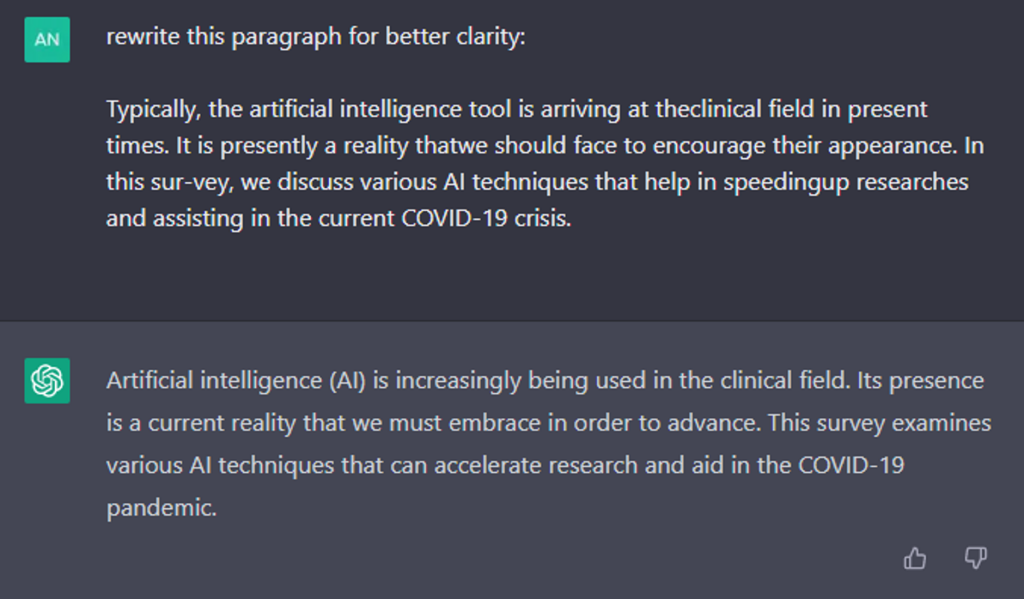

Experiment 4. Grammar and Typo Checking

AI grammar-checking assistants have been around for a while. They are good at correcting grammatical mistakes and typos and at suggesting alternative words. Some paid services can even help improve sentence structure and clarity. In this final experiment, let’s test whether ChatGPT can do a better job in this area.

We picked two paragraphs from a poorly written paper that was initially published in the journal IEEE Access and later retracted. We also intentionally introduced a few typos into these paragraphs to see how the tool would handle them.

E.g.1 – Fix grammatical errors and typos

E.g.2 – Rewrite for better clarity

We were happy to see some promising results: the writing improved significantly. Not only was ChatGPT able to fix basic errors, but it also reorganized loosely connected sentences into a more cohesive and understandable form (as seen in the second example). This can be useful for proofreading papers and improving the clarity of writing.

Concerns about Academic Integrity

Since ChatGPT’s launch, some academics have expressed concerns that it could pose a threat to academic integrity. To prevent academic plagiarism and the mass generation of propaganda, OpenAI scientists have been working on “watermarks” for GPT-3-generated text, and anti-plagiarism tools are being developed to detect articles written by ChatGPT. One user tweeted, “We’re witnessing the death of the college essay in realtime”. A college English professor, however, seems less worried, stating in a recent post that ChatGPT still lacks the ability to “address precise or local issues, generate an original argument, or interrogate other arguments rather than just citing them, which are all key aspects of effective essay-writing.” Our own experimental results support this view.

Some scholars believe that rather than strictly prohibiting these tools, we should embrace them and design better assessments that incorporate AI-generated text, which would be a more sustainable approach in the long run.

Summary

In this fast-changing world, encountering emerging AI tools is inevitable. Understanding how they work can help us use them wisely and responsibly. We hope the experiments in this post help achieve this goal.

| While GPT-3 makes many basic mistakes, we are seeing glimmers of intelligence, and it is, after all, only version 3. Perhaps in twenty years, GPT-23 will read every word ever written and watch every video ever produced and build its own model of the world. This all-knowing sequence transducer would contain all the accumulated knowledge of human history. All you’ll have to do is ask it the right questions. (AI 2041, p.116) |

As an academic librarian, I am an advocate of open access and open science, and I am excited about recent developments in open metadata, which make the research literature more discoverable and accessible. Aaron Tay from SMU pointed out in a recent post that one sometimes-overlooked benefit is that “open access is not just for humans, but also for machines.” With the power of GPT and more advanced language models in the near future, we see tremendous potential for transforming the world’s knowledge into actionable insights that can benefit not only research, but all of humanity.

Note 1: Unfortunately, ChatGPT is currently not available in Hong Kong, so users need to access it through a VPN.

Note 2: ChatGPT is still learning and evolving. During the testing period, we saw new features added, such as chat history, and noticed that the responses now sound more “humble”. Users may therefore see a different interface and different results from time to time. As a disclaimer, the author of this post also used ChatGPT to proofread the text and incorporated its suggestions.

– By Aster Zhao, Library


Category: AI in Research & Learning, Research Tools

Tags: AI Assistant, ChatGPT, GPT-3, OpenAI, scholarly writing

published December 23, 2022

last modified January 22, 2024