Research Bridge

AI in Academic Publishing: A Call for Ethical Practices

Academic Publishing

AI in Research & Learning

In an unprecedented move, nearly all editors of Elsevier’s Journal of Human Evolution (JHE) resigned in late 2024, protesting the unchecked adoption of AI tools, steep publishing fees, and ethical erosion in academic publishing. As researchers, we face an urgent question: How can the scholarly community harness AI’s potential without compromising the integrity of human-driven research?

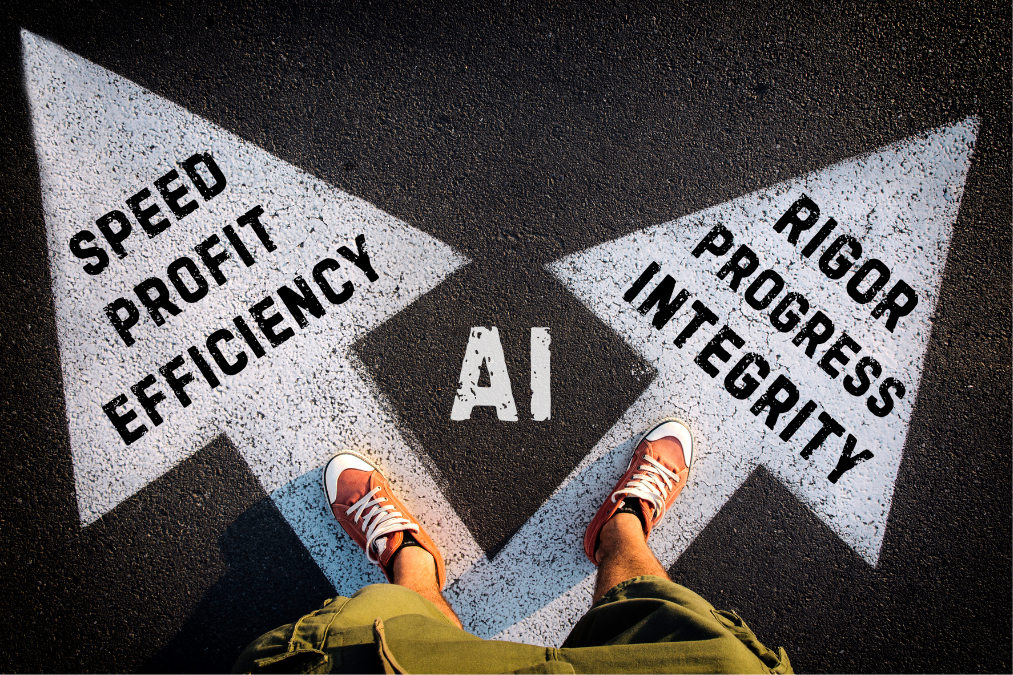

Efficiency vs. Integrity: The AI Dilemma

AI tools offer significant potential to streamline academic workflows through automated peer review, plagiarism detection, and manuscript editing. However, misuse of these tools, or over-reliance on them, raises serious concerns. Researchers and editors warn that AI-generated content can dilute research quality and introduce errors or oversights that human experts would catch.

Moreover, AI tools often lack the nuance and contextual understanding that human editors and reviewers provide. A seasoned editor can recognize innovative methodologies and subtle arguments within their field, capabilities that AI has yet to master. The "black box" nature of AI algorithms also obscures how decisions are made, further undermining trust in the integrity of published work.

Profit Over Progress: The Commercialization Crisis

The JHE resignations also spotlight a systemic issue: the tension between profit motives and scholarly values. AI-driven “rapid review” systems may prioritize speed over rigor to meet profit targets. Meanwhile, the high processing fees charged by publishers often limit access to research, creating barriers for both authors and readers.

The resigning JHE editors argued that Elsevier’s AI-driven cost-cutting measures prioritized profit over academic integrity. By reducing human editorial oversight, publishers risk diminishing research quality and credibility. This growing commercialization of publishing underscores the need for ethical safeguards in AI integration.

A Call for Transparency, Accountability & Ethics

To address these issues, the research community must establish clear guidelines for responsible AI integration in academic publishing, ensuring that AI complements, rather than replaces, human expertise. While AI can enhance efficiency, its implementation must be guided by accountability, transparency, and ethical principles.

- Accountability as a foundation: Develop ethical frameworks governing AI use in publishing, for example by requiring "AI Impact Statements" from journals that adopt these tools.

- Transparency in implementation: Not only authors but also publishers and journals must disclose how AI tools are used (e.g., in peer review or editing). Editors must mandate human oversight; no algorithm should alter content without expert verification.

- Equity in outcomes: Channel AI-driven cost savings into reduced publishing fees, so that these savings directly benefit authors and readers, not just publishers. The research community should also support transitions to non-profit publishing models, following successful examples such as the 2023 resignation of NeuroImage's editors to launch the non-profit journal Imaging Neuroscience.

Why This Matters for Your Research

These conflicts aren’t abstract—they directly impact what gets published, how it is framed, and who can afford to participate. As you choose where to submit:

- Scrutinize journals' AI policies

- Advocate for open and transparent peer review

- Support editor-led initiatives to reform publishing ethics

The JHE resignations are not an endpoint but a catalyst. By demanding ethical AI use and equitable access, researchers can reclaim academic publishing's core mission: disseminating knowledge, not growing corporate revenue.

More Readings

- Journal editors’ mass resignation marks ‘sad day for paleoanthropology’ (Science, 9 Jan 2025)

- Evolution journal editors resign en masse [UPDATED] (Ars Technica, 31 Dec 2024)

- Evolution journal editors resign en masse to protest Elsevier changes (Retraction Watch, 27 Dec 2024)

Edited By

Kevin Ho & Aster Zhao, Library

Published

14 Feb 2025
