Conducting literature reviews can be a daunting and time-consuming task. Last week, HKUST Library offered a workshop introducing the latest AI tools that can assist in this process. This post shares the key highlights from the workshop.

Understanding LLMs & Tools for Research

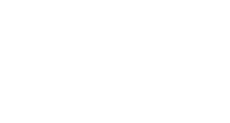

While the workshop focused on AI tools designed for research, it is essential to understand the capabilities and limitations of the large language models (LLMs) that power these tools. Currently, the most advanced LLMs include GPT-4 from OpenAI, Claude-3-Opus from Anthropic, and Gemini-1.5-Pro from Google. GPT-4 models excel at reasoning and coding tasks, while Claude models are known for their human-like writing and large context lengths. The newly released Gemini-1.5-Pro models look promising for their capacity to process even larger amounts of text: up to 1 million tokens (~750k words). When choosing between these models, researchers should consider each one’s context length limitations and be aware of the computational cost (“credits”) required to generate answers, especially when working with long inputs.
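The token figure above implies a rough rule of thumb of about 0.75 English words per token. The short Python sketch below uses that ratio to estimate whether a set of papers will fit into a model’s context window. The ratio, the 4,000-token reserve for the answer, and the window sizes in the example are illustrative assumptions; actual counts depend on each model’s tokenizer and on the language of the text.

```python
# Rough rule of thumb implied by "1 million tokens (~750k words)":
# about 0.75 English words per token. This is an assumption; real ratios
# vary by tokenizer, language and type of text.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count: int) -> int:
    """Estimate how many tokens an English text of `word_count` words uses."""
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, context_tokens: int,
                    reserve_for_answer: int = 4_000) -> bool:
    """Check whether the input plus room for the answer fits the context window.

    `reserve_for_answer` is a hypothetical allowance for the model's reply.
    """
    return estimate_tokens(word_count) + reserve_for_answer <= context_tokens


if __name__ == "__main__":
    print(estimate_tokens(750_000))               # 1000000
    # Hypothetical input: 10 papers of about 8,000 words each.
    total_words = 10 * 8_000
    print(fits_in_context(total_words, 200_000))  # True (about 106,667 + 4,000 tokens)
    print(fits_in_context(total_words, 100_000))  # False
```

Since long inputs also use up more credits, a quick estimate like this can help decide whether to send whole papers or only selected sections.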

Poe.com, a popular AI tool in Hong Kong due to its accessibility, offers a wide range of LLMs, including free and paid as well as proprietary and open-source options. The platform currently hosts over 50 “bots”, including the latest GPT-4, Claude-3 and Gemini models, many of which are free to use (Figure 1). A full comparison of these bots in terms of their context length, knowledge cut-off dates, and credits used can be found in our course guide.

Fig 1. Some bots available on Poe.com (as of 12 April 2024). Image extracted from workshop slides.

AI Tools for Literature Review

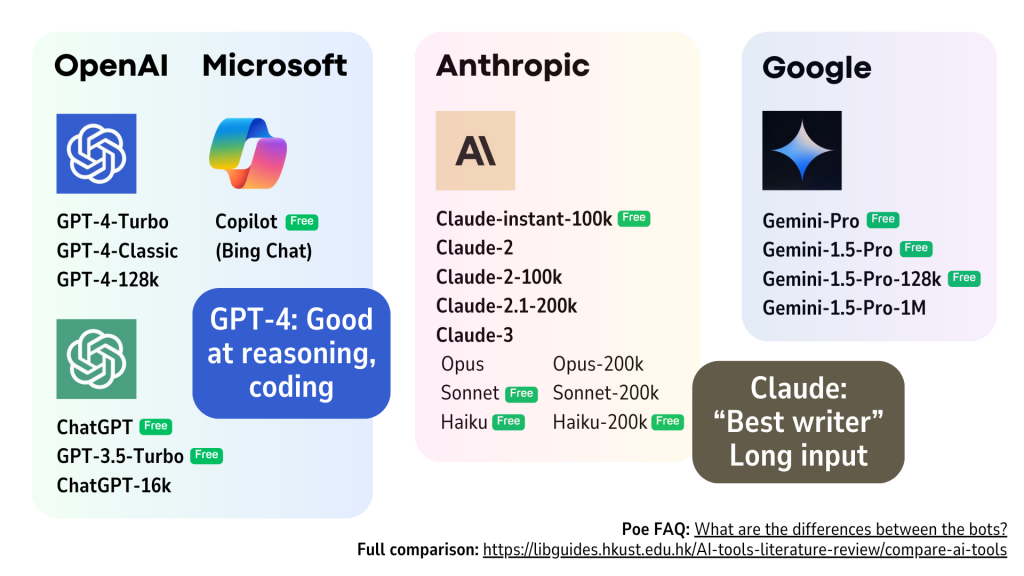

The workshop divided the literature review process into three broad stages: 1) Developing research questions, 2) Literature searching and review, and 3) Writing the review. Figure 2 provides an overview of potential AI tools that can assist with research activities at different stages of this process. While AI tools can be helpful, we still need the “traditional” research tools, such as scholarly databases for finding articles and reference management tools for organizing citations.

Fig 2. Overview of GenAI tools for Literature Review.

Key Highlights:

Brainstorming Topics or Directions

One of the most exciting capabilities of GenAI tools is idea generation. Tools like Poe (both GPT-3.5 and GPT-4 bots) and Copilot (formerly Bing Chat) can help brainstorm potential research topics by providing detailed suggestions based on prompts. A typical example would be:

| Prompt | Result |
| --- | --- |
| I want to study the impact of GenAI in scholarly publishing. Suggest some directions. | Chat on Poe (GPT-4) |

Preliminary Literature Search

A preliminary search helps researchers get a quick view of the existing research, identify key concepts and research gaps, and form or refine their research questions or hypotheses. In addition to traditional discovery tools like Google Scholar and research databases, tools such as Perplexity and Consensus can also be useful.

Perplexity can search across the web or academic sources, providing real sources and brief summaries of the articles. Here’s an example:

| Prompt | Result |
| --- | --- |
| I'm researching on how duration of sleep affects weight control. Suggest some research articles. | Chat on Perplexity (with Pro Search, limited to the latest 5 years) |

Consensus excels at categorizing evidence and drilling down into the study types, populations and outcomes of its sources, which is particularly useful in medical research. See this example:

| Prompt | Result |
| --- | --- |
| Does sleeping less gain weight? | Chat on Consensus (with Synthesize and Copilot features turned on) |

Building Search Queries

Researchers can sometimes struggle with developing keywords and constructing effective search queries across different databases. AI can help with this task by providing sample search queries. Here’s an example:

| Prompt | Result |
| --- | --- |
| I'm looking for research articles related to sleep and weight control in Web of Science. My search query would be like sleep AND "weight control". Can you help me expand the keywords and build a more constructive search query? | Chat on Poe (GPT-4) |
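The structure an AI tool typically suggests here is the standard Boolean pattern: synonyms for one concept are joined with OR inside parentheses, and the concepts are then joined with AND. The Python sketch below assembles such a query from keyword lists. The synonym lists are hypothetical examples, not output from the chat above; the `TS=` prefix is the Topic field tag in Web of Science Advanced Search, and other databases use different field syntax.

```python
def quote(term: str) -> str:
    """Wrap multi-word phrases in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concept_groups: list[list[str]]) -> str:
    """OR together the synonyms within each concept, then AND the concepts."""
    blocks = ["(" + " OR ".join(quote(t) for t in group) + ")"
              for group in concept_groups]
    return " AND ".join(blocks)


# Hypothetical keyword lists for the sleep / weight-control example.
sleep_terms = ["sleep duration", "short sleep", "sleep deprivation", "sleep restriction"]
weight_terms = ["weight control", "weight gain", "body weight", "obesity", "BMI"]

query = build_query([sleep_terms, weight_terms])
print(query)
# Web of Science Advanced Search: restrict the whole query to the Topic field.
print(f"TS=({query})")
```

Keeping each concept in its own list makes it easy to add or drop the synonyms an AI tool suggests and re-run the search.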

In-Depth Literature Search

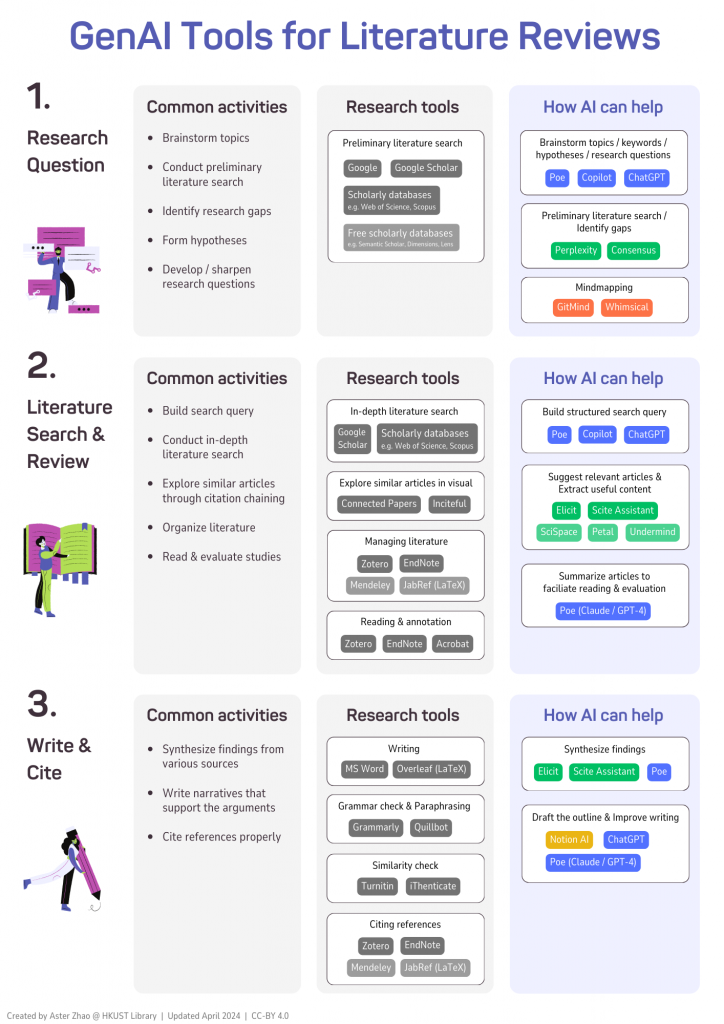

Many AI-powered research tools can suggest relevant articles based on a question and provide summaries of these articles. Scite Assistant, Elicit, and Undermind are highlighted for their high recall of articles and in-depth analysis of the sources. High recall refers to the ability to retrieve a large number of relevant articles for a given query, minimizing the chances of missing important papers. This is particularly valuable for conducting comprehensive literature reviews.
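In information-retrieval terms, recall is the fraction of all relevant articles that a search actually returns, while precision is the fraction of returned articles that are relevant. The Python sketch below computes both for two hypothetical tools. Because the complete set of relevant papers is rarely known, it assumes a small “gold set” of key papers the researcher already knows should be found; the article IDs are made up for illustration.

```python
def recall(retrieved: set[str], relevant: set[str]) -> float:
    """Fraction of the relevant articles that the search retrieved."""
    if not relevant:
        raise ValueError("recall is undefined without any relevant articles")
    return len(retrieved & relevant) / len(relevant)


def precision(retrieved: set[str], relevant: set[str]) -> float:
    """Fraction of the retrieved articles that are relevant."""
    if not retrieved:
        raise ValueError("precision is undefined when nothing was retrieved")
    return len(retrieved & relevant) / len(retrieved)


# Hypothetical gold set: 10 key papers the review must not miss.
gold = {f"paper{i}" for i in range(1, 11)}

# Tool A returns many results: 8 key papers plus 12 off-topic ones.
tool_a = {f"paper{i}" for i in range(1, 9)} | {f"other{i}" for i in range(1, 13)}
# Tool B returns only 3 results, all of them key papers.
tool_b = {"paper1", "paper2", "paper3"}

print(recall(tool_a, gold), precision(tool_a, gold))  # 0.8 0.4
print(recall(tool_b, gold), precision(tool_b, gold))  # 0.3 1.0
```

For a comprehensive review, the high-recall behaviour of Tool A is usually preferable: off-topic results can be screened out afterwards, but papers that were never retrieved stay missed.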

Scite Assistant is unique in providing context for the citations of the articles it retrieves, indicating whether each article is supported, contrasted or mentioned by other studies. It also extracts the associated text that supports each claim in the summary. Both features make it easier for researchers to evaluate the information and decide whether the evidence can be used in their review. Researchers can use Scite Assistant to find supporting and contrasting evidence for a statement (see example in Figure 3).

Fig 3. An illustration of prompting Scite Assistant to find evidence for a statement.

Elicit is stronger at generating a matrix of articles from a question (optionally including uploaded PDFs of additional articles) and extracting customized information, e.g. adding a column that extracts the key findings of each article. This streamlines literature synthesis and allows efficient comparison across findings. See an example:

| Prompt | Result |
| --- | --- |
| Does sleeping less gain weight? | Chat on Elicit (login required to view) |

Undermind, a relatively new tool, claims to be “10-50x better than Google Scholar”. It is designed to conduct exhaustive literature searches, returning 100 articles in the first run, with the option to expand further. Each article is assigned a “matching” score that indicates its relevance to the question. It also groups the results into a few subject categories, allowing researchers to navigate the literature more efficiently and focus on the aspects most relevant to their question. See an example:

| Prompt | Result |
| --- | --- |
| I'm trying to find research articles to explore the relationship between sleep duration and weight control. I'm more interested in empirical studies rather than review articles. | Chat on Undermind |

Summarizing Articles & Writing

Not every paper found needs a full read. A quick summary of an article, or part of it, can help researchers grasp the main ideas and decide whether the article is worth an in-depth review. GenAI tools are good at summarizing articles, either from directly pasted text or from an uploaded PDF. Here’s an example:

| Prompt | Result |
| --- | --- |
| Summarize the key findings of this article. [upload article PDF] | Chat on Poe (Assistant) |

GenAI tools are also good at writing, but they will not produce the desired results unless the model is given enough context. The best approach is to draft the initial content yourself and then let AI enhance it. Tools like Claude-3 and Notion AI can be useful for such tasks.

Notion is a note-taking system, and Notion AI lets users create and edit content right inside the notebook without copy-pasting from another chatbot. Its most commonly used function, “Improve writing”, typically makes minor adjustments, such as replacing a few words, and the changes sometimes feel mechanical.

For more extensive editing, consider Claude-3 models, which are known for their "human-like" writing. Always use different tools to create several versions and compare them. You can even ask Claude to compare the versions and suggest improvements.

| Prompt | Result |
| --- | --- |
| Improve writing: [paste the draft] | Chat on Poe (Claude-3-Sonnet); Chat on Notion AI |

Other useful instructions: “Check grammar and typos”, “Rephrase for clarity”, “Simplify sentence structure”, “Keep it concise, make it more coherent”.

Limitations of GenAI Tools for Research

While GenAI tools open up possibilities for assisting with literature reviews, it’s important to be aware of their inherent limitations imposed by their underlying models. To summarize:

- Limited context and out-of-date knowledge: The tools or models are trained on data up to a certain cut-off date, so they may not know the latest developments in your research area.

- Risk of inaccuracies and bias: The models can hallucinate and generate fake citations, especially if they are not trained on scholarly materials or are not connected to the internet. Even those trained on scholarly materials and with internet access may generate citations that do not support the generated claims or statements.

- Lack of originality and in-depth analysis: While AI-generated summaries can provide a quick overview of relevant research, they often lack the in-depth critical analysis of how findings relate to one another, and the original insights, that a human researcher can provide. Furthermore, the text can sound robotic and may easily be flagged for plagiarism.

Key takeaways: Use GenAI tools as a companion, not as your sole source for literature searching. Always verify the content before using it. Write your first draft yourself, then use AI to help improve it, rather than having it draft the review for you.

Citing AI in Research

Proper citation of AI tools and AI-generated content is crucial for academic integrity and transparency. Many academic publishers provide guidance on acknowledging the use of GenAI in scholarly writing, covering authorship, disclosure, attribution, and responsibility for the accuracy and integrity of AI-generated content. Although most citation styles do not have specific guidelines for referencing GenAI tools, some styles, such as APA and IEEE, recommend treating the tools as “Software” and AI-generated content as “Personal communication”. For detailed information, please refer to our course guide.

Final Remarks

The workshop introduced a range of GenAI tools for various tasks involved in the literature review process. The main goal was to help researchers familiarize themselves with these tools, understand their capabilities for different purposes as well as their limitations, so that they can make informed decisions when choosing a tool for a specific task.

Check out our course guide to see the PPT slides, the recording, and the full chats of the demos.

We believe that librarians have a role in equipping our scholars with the knowledge and skills needed to identify suitable research tools and evaluate content before using it in research. This role remains important in the GenAI era, which is why we offered this workshop. We encourage researchers to experiment with these GenAI tools and discover how they can enhance their literature review process. If you have any questions or need assistance in using these tools, please do not hesitate to reach out to the library.

Disclaimer: The author welcomes feedback and discussion on this topic or anything related to the workshop. Please do not hesitate to email the author if you notice anything inaccurate.