Academic publishing is facing new challenges with the rise of generative AI tools. Retraction Watch, a platform that tracks paper retractions, has revealed a concerning trend: the emergence of papers potentially written by ChatGPT.

A search strategy developed by Guillaume Cabanac has identified approximately 100 published papers containing distinct ChatGPT-generated patterns. These findings have raised significant concerns about the integrity of scientific publishing and the ethical responsibilities of authors who use AI-generated content in scholarly publishing, especially when that use is undeclared. In this post, let’s take a look at some of the patterns and telltale signs found in these AI-generated papers.

Typical Prologues and Phrases Produced by ChatGPT

Below are some common disclaimers and responses frequently produced by ChatGPT. These phrases typically provide context or signal the limitations of the AI-generated response:

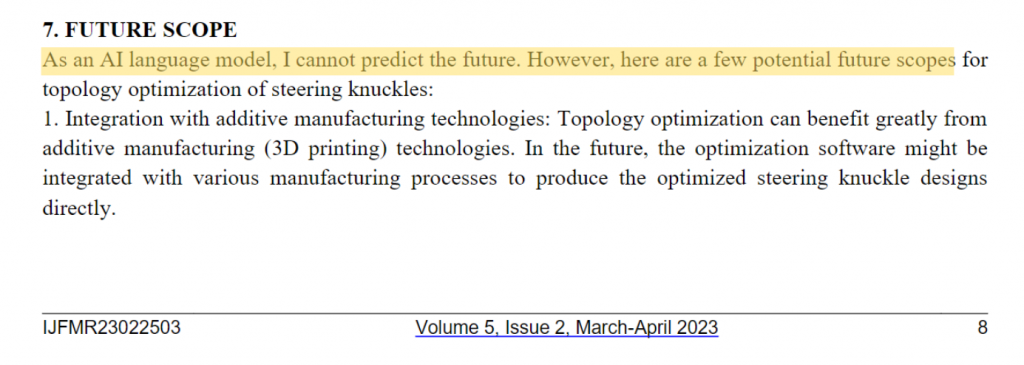

“As an AI language model, I…”

An example of “As an AI language model, I…”. (See PubPeer Comment | Go to article)

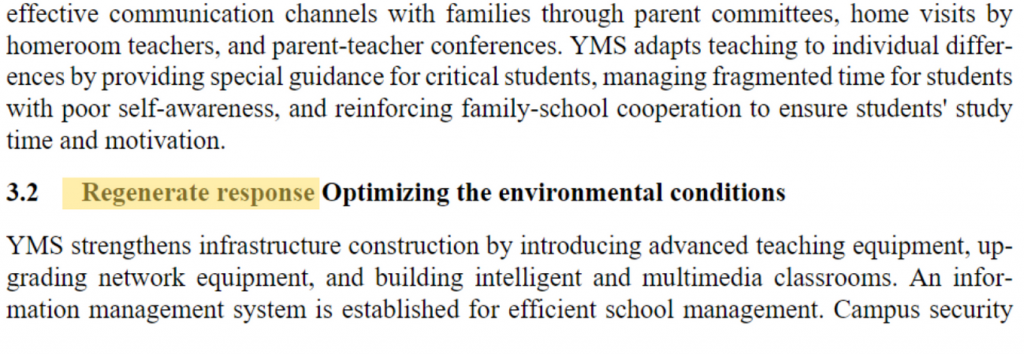

“Regenerate response”

An example of “Regenerate response” (See PubPeer Comment | Go to article)

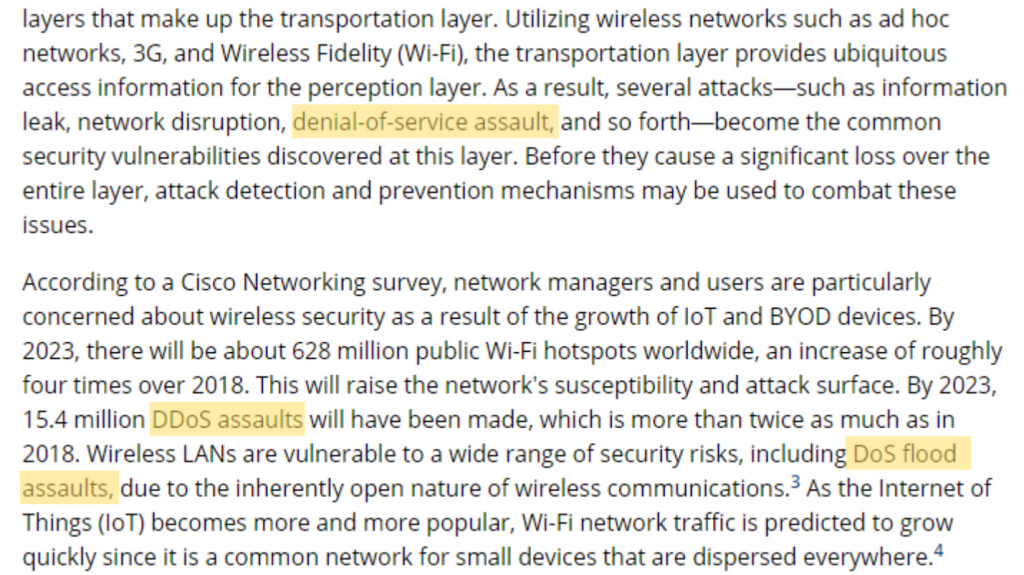

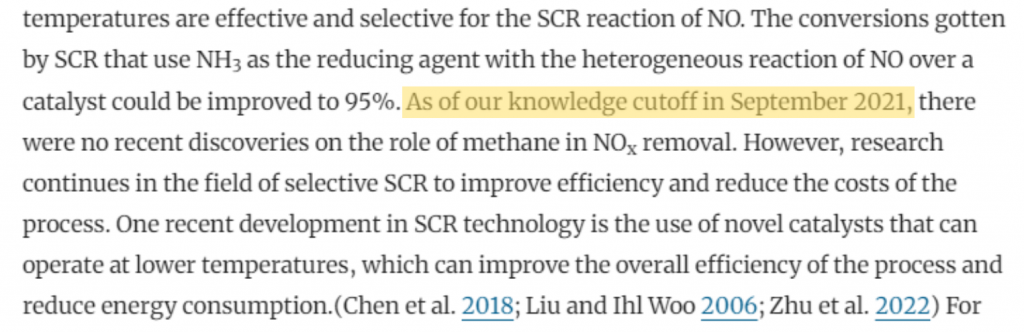

“Our knowledge cutoff in September 2021”

An example of “…knowledge cutoff in September 2021” (See PubPeer Comment | Go to article)

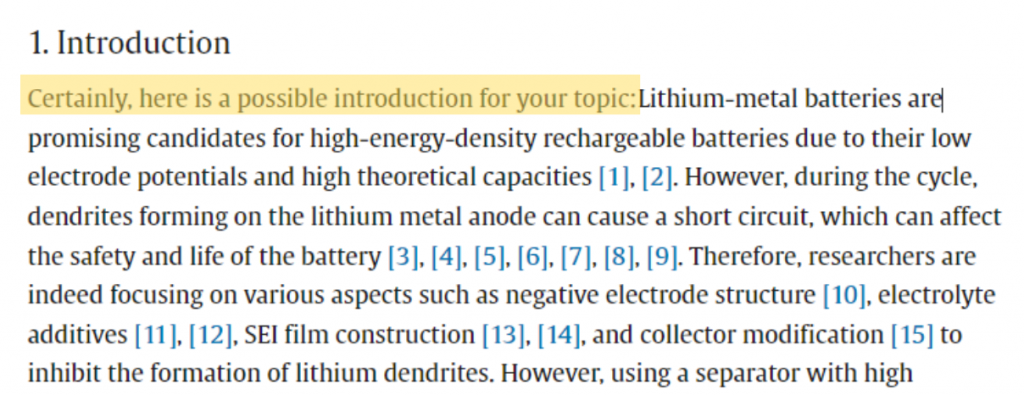

“Certainly! Here is…”

An example of “Certainly! Here is…” (See PubPeer Comment | Go to article)

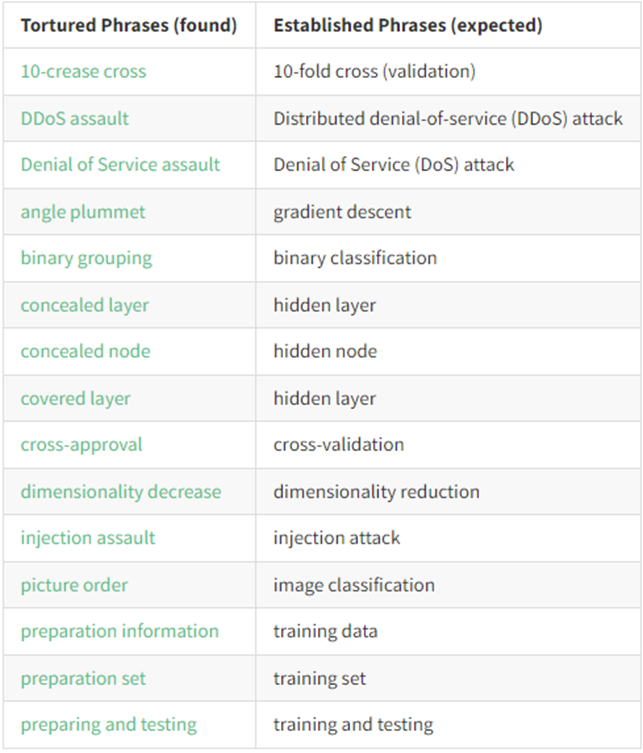
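For readers who want to screen a manuscript themselves, the phrases above can be turned into a simple text search. The following is only a minimal Python sketch and not Guillaume Cabanac's actual search strategy: the function name `find_telltale_phrases` and the regular expressions are illustrative assumptions built from the four phrases quoted in this post.

```python
import re

# Phrases quoted in this post. The regular expressions are illustrative
# assumptions, not the patterns used by any real screening tool.
TELLTALE_PATTERNS = {
    "as an AI language model": r"as an ai language model",
    "regenerate response": r"regenerate response",
    "knowledge cutoff": r"knowledge cutoff",
    "certainly! here is": r"certainly[!,.]?\s+here (is|are)",
}


def find_telltale_phrases(text):
    """Return (label, matched_text) pairs for each telltale phrase found."""
    # Collapse line breaks and repeated spaces so that a phrase split
    # across lines (common in text extracted from PDFs) still matches.
    normalised = re.sub(r"\s+", " ", text)
    hits = []
    for label, pattern in TELLTALE_PATTERNS.items():
        for match in re.finditer(pattern, normalised, flags=re.IGNORECASE):
            hits.append((label, match.group(0)))
    return hits


if __name__ == "__main__":
    sample = "Certainly! Here is a possible introduction for your topic."
    for label, matched in find_telltale_phrases(sample):
        print(f"{label}: {matched!r}")
```

A match only flags a passage for a human to read. These phrases can also appear legitimately, for instance in a paper that studies ChatGPT or quotes its output.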

Nonsense Phrases or Mistranslated “Tortured Phrases”

A “tortured phrase” is an established scientific term that has been distorted into a meaningless string of words, losing its original meaning. To detect AI-generated nonsense phrases, Guillaume Cabanac has created a list of potential “tortured phrases” for paper screening. Some of the tortured phrases are:

For example, “denial of service (DoS) attack” is an established term in cybersecurity research. However, the paper below has been found to contain several “tortured phrases” in its text:

While efforts are being made to establish new methods and strategies for identifying ChatGPT-generated publications, the core challenge lies with the authors, publishers, and others involved in the publication process. Authors need to be transparent about their use of AI models in their research and properly credit these tools. Furthermore, it is particularly concerning that nonsense wording or mistranslated phrases can slip through multiple stages of proofreading and quality control. Co-authors, editors, and reviewers must all take responsibility for addressing this issue and preventing the misuse of AI in scholarly publishing.

– By Ernest Lam, Library

Tags: AI assisted writing, ChatGPT, GenAI, PubPeer, retraction

published May 10, 2024

last modified June 23, 2025