AI’s ability to quickly generate article summaries from a single question is always fascinating. The paragraphs are often well structured, and many do include real sources. But how accurate is the generated content? Do the sources support their statements? Can this summary be treated as literature review? In this post, we will explore four AI-powered research tools – Scite, Elicit, Consensus, and Scopus AI – and examine their capabilities in summary or review generation.

A Brief Walkthrough of the 4 Tools

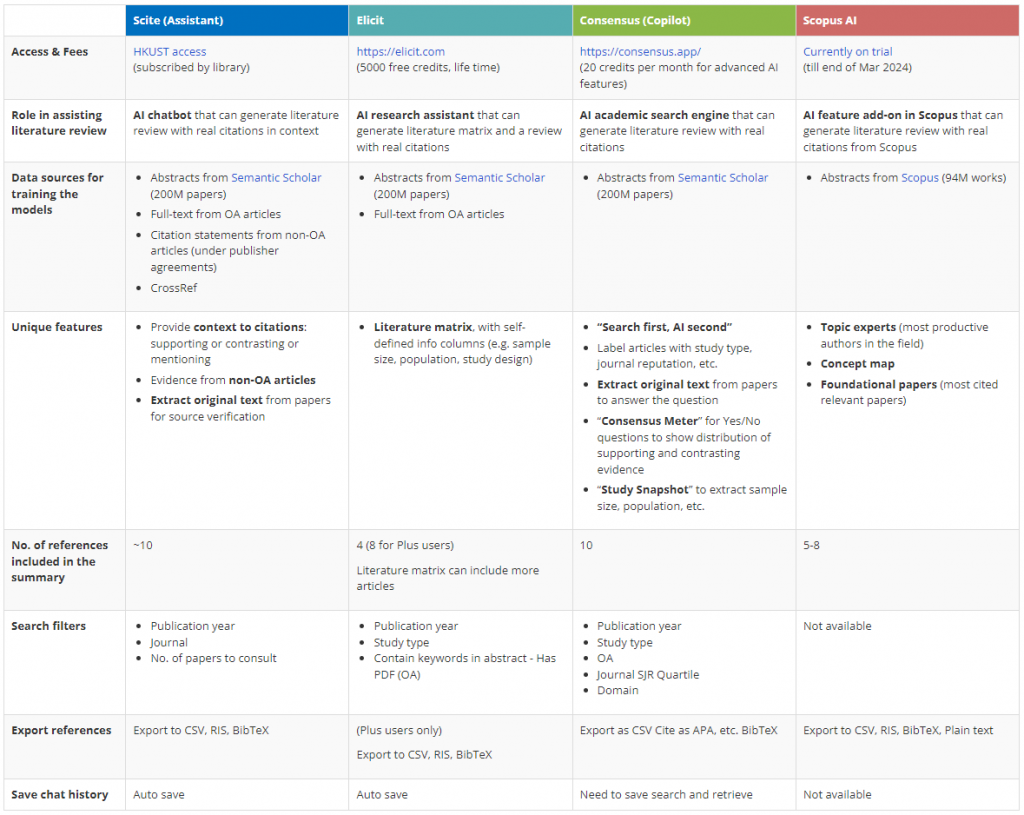

Scite, Elicit, Consensus, and Scopus AI are four AI tools that are currently widely discussed in academia. Unlike general chatbots such as ChatGPT, these tools are powered by models trained on scholarly materials and are specially designed to assist research. They leverage Retrieval Augmented Generation (RAG), a technique in which the tool first retrieves relevant material from external sources, such as web or database searches, and then generates its answer from that material to improve accuracy (read our previous posts about RAG here and here).
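To make the RAG idea concrete, here is a minimal sketch in Python. It is not how any of the four tools is actually implemented: the abstracts, the paper ids, and the helper functions `retrieve` and `build_prompt` are all made up for illustration, and the keyword-overlap scoring is a toy stand-in for the real search these tools run. It only shows the two-step pattern: find relevant sources first, then ask the model to answer from those sources.

```python
import re

# Hypothetical mini "database" of abstracts (invented for this sketch).
# Real tools search millions of records.
ABSTRACTS = {
    "paper_a": "Short sleep duration is associated with weight gain in adults.",
    "paper_b": "Citation networks reveal how research fields evolve over time.",
    "paper_c": "Less sleep increases appetite and energy intake.",
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def retrieve(question, abstracts, top_k=2):
    """Step 1 (retrieval): rank abstracts by the number of words they share
    with the question, and keep the top_k that share at least one word."""
    question_words = set(tokenize(question))
    scores = {
        paper_id: len(question_words & set(tokenize(text)))
        for paper_id, text in abstracts.items()
    }
    ranked = sorted(scores, key=lambda pid: (-scores[pid], pid))
    return [pid for pid in ranked[:top_k] if scores[pid] > 0]


def build_prompt(question, abstracts, paper_ids):
    """Step 2 (generation input): put the retrieved abstracts in front of the
    question so a language model can answer from them and cite them by id."""
    sources = "\n".join(f"[{pid}] {abstracts[pid]}" for pid in paper_ids)
    return (
        "Answer using only these sources and cite them by id.\n"
        f"{sources}\n"
        f"Question: {question}"
    )


question = "does sleeping less lead to weight gain?"
hits = retrieve(question, ABSTRACTS)
prompt = build_prompt(question, ABSTRACTS, hits)
print(prompt)
```

In this toy example the unrelated abstract (`paper_b`) never reaches the prompt, which is why a RAG-based tool can cite real papers. The same pattern also means the answer can only be as good as what the retrieval step finds.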

The primary sources of data for Scite, Elicit, and Consensus are the abstracts from Semantic Scholar, which contains over 200M research papers, and the full text of open access papers. Scite can also obtain citation statements from non-open access articles under specific publisher agreements. Scopus AI relies entirely on abstracts from Scopus, which covers 94M works. Each of these four tools can pull a list of real citations from its own “pool” and summarize them into one or a few paragraphs as a “review”.

While the four tools share common features, each has its own focus and unique features.

1. Scite & Scite Assistant

Scite at its core is a tool to contextualize citations, specifically, to distinguish between supporting and contrasting references. Scite Assistant, an AI chatbot on Scite, can generate summaries with real citations.

Key features to assist literature review:

- In addition to providing a link to the source article, it highlights the relevant extracts from the original sources that support each argument when users hover over a citation. This makes it much easier for users to verify the information.

- It provides customization options in searching, such as the ability to limit the publication year range to focus on more recent publications.

HKUST members can access Scite through the Library and enjoy the full features of the tool.

2. Elicit

Elicit is an AI research assistant designed to streamline research workflows. It has the capability to generate a literature matrix for paper discovery and to produce a summary with real citations. Free account users can retrieve up to 4 papers, while Plus users can retrieve up to 8 papers.

Key features to assist literature review:

- Elicit's most distinctive feature is its literature matrix, where users can add columns to extract the information they need from papers.

- It also allows users to import papers by uploading PDFs or connecting to Zotero, and to run analyses on these papers.

- It also gives users the option to limit the publication year range.

3. Consensus

Consensus markets itself as the best AI academic search engine. It focuses on searching, and has integrated an AI feature called “Copilot” to enhance the user’s searching experience. Consensus Copilot can also generate summaries with real citations.

Key features to assist literature review:

- Consensus can label articles based on study type (e.g., systematic review, RCT) and journal reputation, allowing users to prioritize their reading. The “Study Snapshot” for each article dives into the article’s abstract, extracting information such as sample size, population, and more.

- It can extract original text from papers, making it easier for users to verify information. For Yes/No questions, the “Consensus Meter” displays a distribution of supporting and contrasting evidence.

- It also gives users the option to limit the publication year range.

- It allows users to add further instructions to the prompt, e.g., to group the evidence on both sides.

4. Scopus AI

Scopus is a large multidisciplinary database used for discovering research articles. It has recently incorporated an AI feature, Scopus AI, which can assist in generating summaries with real citations from Scopus. Currently, Scopus AI will only retrieve papers published after 2013.

Key features to assist literature review:

Besides the summary, it also provides:

- Topic experts – to show most productive authors in the field

- Concept map – a mind map to assist the understanding of the topic

- Foundational papers – the most cited relevant papers

HKUST Library is currently offering a trial of Scopus AI until the end of March 2024.

Evaluating the AI-generated Summaries

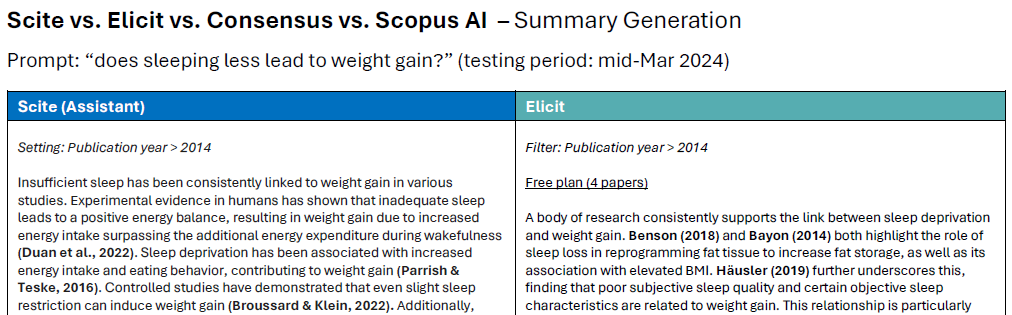

In this section, we used each tool to generate a summary based on the same prompt, “does sleeping less lead to weight gain?”. Results can be viewed in this document.

Length of Summary

- At first glance, we can see that Scite Assistant and Consensus produce longer summaries, averaging 10 citations, while Scopus AI includes about 5-8, and Elicit typically provides 4 (up to 8 for Plus users).

Coverage of Sources

- Scite Assistant, Elicit, and Consensus offer publication year filters, while Scopus AI currently defaults to post-2013 studies.

- We aligned the year range to roughly the most recent 10 years (from 2013-2015 to the present). Although Scite Assistant, Elicit, and Consensus draw on the same data source (Semantic Scholar), the works they retrieved did not overlap much. Scite Assistant found quite different papers compared to the other two. Interestingly, most of the papers identified by Elicit were also found by the other three tools.
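Readers who want to repeat this kind of overlap check on their own prompts could compare the reference lists pairwise. The sketch below is one simple way to do it in Python, using the Jaccard index (shared references divided by all distinct references). The identifiers such as `doi:A` are placeholders invented for illustration, not the papers from our test, and the function names `jaccard` and `pairwise_overlap` are our own.

```python
from itertools import combinations

# Placeholder reference lists (hypothetical identifiers, NOT our actual results).
# In practice, use a stable identifier such as the DOI of each cited work.
references = {
    "Scite Assistant": {"doi:A", "doi:B", "doi:C"},
    "Elicit": {"doi:C", "doi:D"},
    "Consensus": {"doi:C", "doi:D", "doi:E"},
}


def jaccard(a, b):
    """Share of references two tools have in common: |A and B| / |A or B|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def pairwise_overlap(refs):
    """Jaccard overlap for every pair of tools, keyed by (tool_1, tool_2)."""
    return {
        (x, y): jaccard(refs[x], refs[y])
        for x, y in combinations(sorted(refs), 2)
    }


overlap = pairwise_overlap(references)
for (tool_1, tool_2), score in overlap.items():
    print(f"{tool_1} vs {tool_2}: {score:.2f}")
```

A score of 1 means two tools cited exactly the same works and 0 means they shared none. Matching on DOIs rather than titles avoids false mismatches caused by small differences in how each tool formats a title.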

Here is a breakdown of the works each tool includes. The document type, publication year, total citations in Google Scholar, Open Access status, and the publisher of the journal are also detailed.

From the table we find that:

- Recency of sources: Scite Assistant covers the latest research up to 2023, while Consensus and Scopus AI include articles until 2022, and Elicit’s sources only extend to 2019.

- OA inclusion: It’s surprising to see that all the papers Scite Assistant found are OA papers (gold, bronze, and green versions). Consensus has the fewest OA papers. Note that Scite Assistant and Elicit will also look into the full text of OA articles, so more OA inclusion could potentially improve the accuracy of the summary.

- Document type: All tools include a number of review articles. Some also include book chapters, short notes, editorials, or even case studies. While review articles are valuable for providing a comprehensive understanding of a topic, relying more on them and including fewer original research articles could limit the range of new empirical data being considered.

- Citation & Journal Source: All tools cover a fair number of highly cited articles, based on Google Scholar citations. However, it’s worth noting that Scite Assistant includes a case study published in a potentially predatory journal.

Depth of Analysis

- Scite Assistant and Elicit provide more structured summaries with in-text citations, while Consensus and Scopus AI focus on key study points without much narrative cohesion. Among the four, Scite Assistant’s summary appears to be the most comprehensive.

- Though Elicit and Scopus AI include fewer studies, both tools cite a study that presents contradictory evidence (Yu et al., 2019) about the relationship between less sleep and weight gain, which the other two tools do not include.

- All summaries did acknowledge the link between short sleep duration and weight gain, but only provided a surface-level summary of the studies. They lacked in-depth critical analysis on the relationships between the findings.

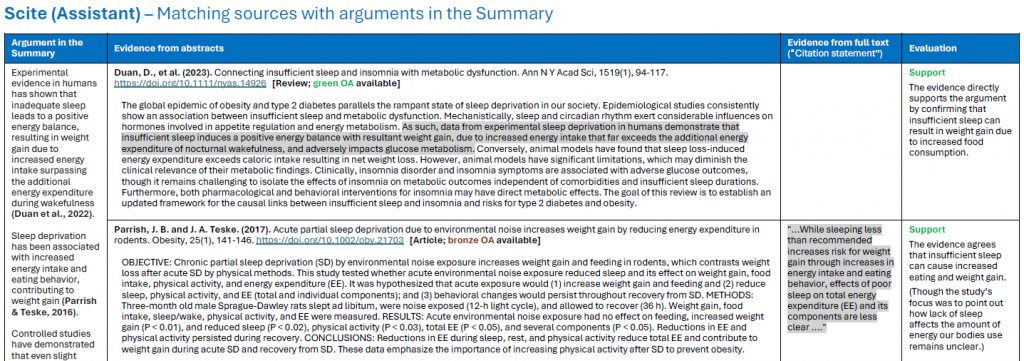

Matching of Sources

While all four AI tools can generate real citations, this does not ensure that the citations will match with the arguments generated in the review. A detailed examination of the sources has been compiled in this PDF.

Key take-aways include:

- Overall, the generated arguments were well supported by the sources. Scite Assistant was able to retrieve the full text of articles in addition to the abstracts, while the other three tools seem to mainly surface the abstracts.

- Sometimes these tools draw inaccurate conclusions from the introductory or general statements in an abstract instead of its specific findings or conclusions, potentially leading to biased summaries. There are also instances where these tools quote secondary sources, e.g. Consensus – Ref. 4, or where Elicit and Consensus both quote a “Note”, Benson (2018), which is only a brief summary of another research article. These could also introduce inaccuracies or bias into the summary.

- Among the four, Scopus AI seems to perform the worst. There are instances where the sources did not support the arguments (see “Mechanisms” part in the review). In another case, Scopus AI copied a source’s abstract almost word-for-word into the summary (see “Experimental evidence” part), which could result in plagiarism. This is consistent with the findings laid out in this post.

Discussion

In this demo, we used a well-researched topic with extensive existing literature. This may explain the relatively high accuracy of the four AI tools in generating summaries that match the sources, as they have been trained extensively on this data. However, when dealing with new or less documented subjects, these models may produce less reliable content (see a Scopus AI example and Scite Assistant example). In a recent talk at HKBU, Consensus also suggested that we should “focus on topics that are likely covered in research papers”. For new topics or more nuanced questions, keyword searches in traditional tools such as Google Scholar and research databases may be more reliable.

It’s also notable that rerunning the same prompt multiple times yielded very similar results for summaries and references across all tools. This is good in terms of consistency, but it also suggests that any direct copying will be easily detected if another person uses the same prompt and also copies directly.

A comprehensive, in-depth literature review should do more than just summarize the findings of individual studies. It should critically analyze the methodologies, results, and conclusions of the studies, identifying patterns, inconsistencies, and gaps in the research. These AI generated summaries can serve as a good starting point for understanding the topic, but they should be expanded with more research and insight needed for a literature review. This also emphasizes the importance of human input in conducting literature reviews, as researchers bring their domain expertise, critical thinking skills, and ability to make connections across studies that current AI tools cannot fully replicate.

Key Take-aways

Finally, a few take-aways to share, along with an appendix that summarizes the features of the four tools in one table.

- AI tools like Scite Assistant, Elicit, Consensus, and Scopus AI can generate summaries and references quickly, but the accuracy and completeness of outputs vary.

- Generating accurate citations doesn’t ensure their alignment with the arguments in the summary.

- These tools should be used as a starting point for research and not as a replacement for a thorough literature review. The information they generate should always be verified.

Appendix. Comparing the four tools in more detail

Further Readings

- Guest Post – There is More to Reliable Chatbots than Providing Scientific References: The Case of ScopusAI, by The Scholarly Kitchen (Feb 2024)

- Scite assistant – academic search engine enhanced with ChatGPT, by Aaron Tay (Aug 2023)

- Using Large language models to generate and extract direct answers – More academic search systems – Scite Assistant, Scispace, Zeta Alpha, by Aaron Tay (Jul 2023)

- Are we undervaluing Open Access by not correctly factoring in the potentially huge impacts of Machine learning? — An academic librarian’s view (I), by Aaron Tay (Dec 2022)

- Q&A academic systems – Elicit.org, Scispace, Consensus.app, Scite.ai and Galactica, by Aaron Tay (Nov 2022)

– By Aster Zhao, Library

Disclaimer: The author welcomes feedback and discussion on the topic. Please do not hesitate to email the author if you notice anything inaccurate.

published March 20, 2024